The AI Regulatory Landscape: U.S., EU, China, and Russia

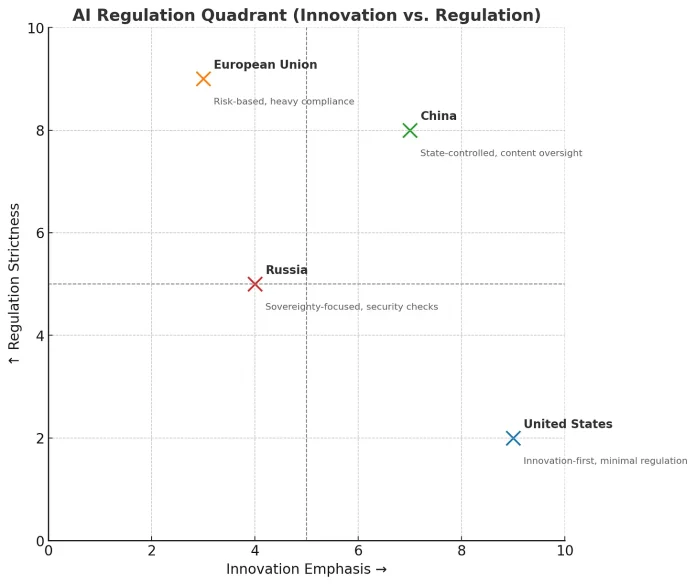

Artificial intelligence is increasingly shaped by how governments choose to regulate it. The U.S. and China have rolled out new frameworks, the EU’s AI Act continues to tighten, and Russia is charting its own path. Below is a comparative look at these evolving frameworks as of August 2025, highlighting their core philosophies, policy trends, and what they mean for global AI builders.

🇺🇸 The United States: Innovation First, Regulation Later

The U.S. remains focused on an innovation-first strategy. America’s AI Action Plan (July 2025) signals a decisive move away from pre-emptive regulation, emphasizing innovation, infrastructure, and security diplomacy.

Key Pillars:

- Removing regulatory “red tape”
- Protecting free speech in frontier AI
- Promoting open-source development
- Allowing market forces to lead innovation

At the Paris AI Action Summit (Feb 2025), Vice President JD Vance warned that “excessive regulation of the AI sector could kill a transformative industry just as it’s taking off.”

Takeaway:

AI developers in the U.S. enjoy high flexibility, but they should track emerging NIST standards and prepare for sector-specific audits.

🇪🇺 The European Union: The Risk Manager’s Approach

The EU AI Act aims to become the world’s benchmark for AI safety and accountability through its risk-tiered model:

- Unacceptable Risk: Banned (e.g., social scoring, manipulative AI)
- High Risk: Subject to strict oversight
- Limited Risk: Transparency rules
- Minimal Risk: Largely free to operate

In July 2025, the EU introduced new General-Purpose AI (GPAI) guidance, including:

- Compute thresholds (cumulative training compute above 10²⁵ FLOPs = presumed systemic risk)
- Notification duties for large model providers
- Open-source exemptions (unless the model is deemed to pose systemic risk)
- Staggered enforcement through 2026
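The 10²⁵ FLOP threshold is easier to reason about with numbers. The sketch below estimates a model's cumulative training compute using the widely cited "6 × parameters × training tokens" rule of thumb for dense transformers and compares it with the presumption threshold. That heuristic is an assumption on our part: the Act sets the threshold, not this estimation method. The function names and model sizes are hypothetical and purely illustrative.

```python
# Rough training-compute estimate compared against the EU AI Act's
# systemic-risk presumption threshold for general-purpose AI models.
# ASSUMPTION: uses the common "6 * N * D" heuristic (N = parameters,
# D = training tokens) for dense transformers. It is a rule of thumb,
# not an official measurement method under the Act.

SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25  # cumulative training compute, in FLOPs


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute as 6 * N * D floating-point operations."""
    return 6.0 * n_params * n_tokens


def presumed_systemic_risk(n_params: float, n_tokens: float) -> bool:
    """True if the estimated compute is greater than the 10^25 FLOP threshold."""
    return estimate_training_flop(n_params, n_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOP


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to land on either side of the line.
    examples = {
        "7B params, 2T tokens": (7e9, 2e12),
        "400B params, 15T tokens": (400e9, 15e12),
    }
    for name, (n, d) in examples.items():
        flop = estimate_training_flop(n, d)
        print(f"{name}: ~{flop:.1e} FLOPs, presumed systemic risk: {presumed_systemic_risk(n, d)}")
```

Under this heuristic, the 7B-parameter example comes to about 8.4 × 10²² FLOPs, well below the line, while the 400B-parameter example reaches about 3.6 × 10²⁵ FLOPs and would trigger the presumption. This is why compute tracking from the first training run matters for EU compliance.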

Takeaway:

Expect the most rigorous compliance demands. Documentation, compute tracking, and transparency are non-negotiable.

🇨🇳 China: State-Controlled Innovation

China continues to expand its vertically integrated AI governance model. The Interim Measures for Generative AI Services (Aug 2023) now work alongside new national standards (taking effect Nov 2025) and mandatory AI content labeling rules (effective Sept 2025).

Core Principles:

- Vertical, sector-specific regulations
- State monitoring of training data and outputs
- Alignment with “core socialist values”
- Focus on controlled innovation and consumer protection

Researchers call this approach “controlled care”—a balance between safety, ideology, and state oversight.

Takeaway:

Anyone targeting the Chinese market must design for traceability, state review, and ideological compliance.

🇷🇺 Russia: Strategic Sovereignty

Russia’s National Strategy for AI Development (updated in 2024) emphasizes technological independence and education-driven growth.

Key Policies:

- Strengthening domestic compute and research infrastructure
- Expanding AI education (9 schools in 2023 → 36 in 2025)
- Boosting national prestige via AI Olympiads

Russia’s philosophy mirrors its geopolitical stance: AI as a tool for sovereignty and resilience.

Takeaway:

Expect tight government collaboration and security-centric design standards.

🌍 What It Means for Global AI Builders

| Region | Regulatory Style | Key Focus | Developer Advice |
|---|---|---|---|
| U.S. | Innovation-first | Freedom & market-led growth | Stay aligned with NIST; anticipate sector rules |
| EU | Risk management | Consumer protection & transparency | Prioritize compliance early |
| China | State-controlled innovation | Ideological oversight & traceability | Build compliant, explainable systems |
| Russia | Strategic sovereignty | National independence | Prepare for security reviews |

Conclusion:

The AI regulatory world is splitting into four major philosophies: innovation-led, risk-managed, state-controlled, and sovereignty-driven. For global AI builders, success will depend on mastering regulatory multilingualism, the ability to innovate responsibly under all four systems.

Author’s Note

If you enjoyed this breakdown, you can support my writing (and maybe buy me a coffee!) ☕ through Buy Me a Coffee. Let’s keep decoding AI policy together.